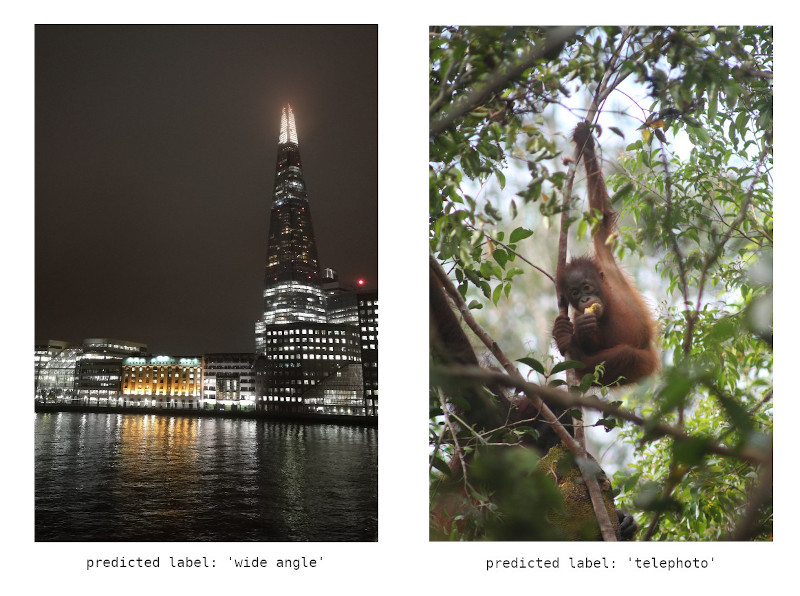

Does computer vision apprehend the world through a specific lens? And if so, which one? In this project I set out to design a deep learning classifier that is purposefully blind to what photographs are of: a type of machine vision that cares nothing about recognising objects, people or scenes, and is instead programmed to learn only about how photographs were made, and more specifically about the lenses used in their making.

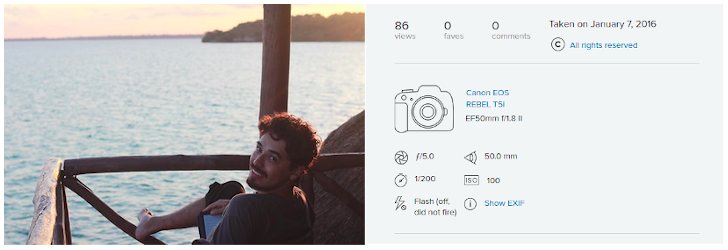

This project takes the Flickr photo IDs from the Visual Genome dataset (used in Made by Machine) and retrieves the EXIF metadata of the corresponding photographs through Flickr’s API. The model was trained using a PyTorch implementation of a vanilla VGGNet. This is how EXIF info looks on Flickr:

This is an (abbreviated) example of Flickr’s API response:

    [
        {'label': 'Make',
         'raw': {'_content': 'Canon'},
         'tag': 'Make',
         'tagspace': 'IFD0',
         'tagspaceid': 0},
        {'label': 'Model',
         'raw': {'_content': 'Canon EOS REBEL T5i'},
         'tag': 'Model',
         'tagspace': 'IFD0',
         'tagspaceid': 0},
        {'clean': {'_content': '50 mm'},
         'label': 'Focal Length',
         'raw': {'_content': '50.0 mm'},
         'tag': 'FocalLength',
         'tagspace': 'ExifIFD',
         'tagspaceid': 0},
        {'label': 'Lens Model',
         'raw': {'_content': 'EF50mm f/1.8 II'},
         'tag': 'LensModel',
         'tagspace': 'ExifIFD',
         'tagspaceid': 0},
    ]
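As a rough illustration of how a response like this could be collapsed into a single row of metadata, here is a minimal sketch. The function name `flatten_exif`, the `wanted` tuple and the preference for the `clean` value over `raw` are my assumptions for the example, not necessarily what the project's code does.

```python
def flatten_exif(tags, wanted=("Make", "Model", "FocalLength", "LensModel")):
    """Collapse a list of Flickr EXIF tag dicts into one flat record.

    Hypothetical helper: the name and the choice of tags are illustrative.
    """
    record = {}
    for entry in tags:
        tag = entry.get("tag")
        if tag not in wanted:
            continue
        # Assumption: prefer the tidied 'clean' value when present,
        # otherwise fall back to the 'raw' value.
        value = entry.get("clean", entry.get("raw", {})).get("_content")
        # Keep the first occurrence if a tag appears in several tagspaces.
        record.setdefault(tag, value)
    return record


# The abbreviated response shown above, reduced to the fields the helper reads.
sample = [
    {"label": "Make", "raw": {"_content": "Canon"}, "tag": "Make"},
    {"label": "Model", "raw": {"_content": "Canon EOS REBEL T5i"}, "tag": "Model"},
    {"clean": {"_content": "50 mm"}, "label": "Focal Length",
     "raw": {"_content": "50.0 mm"}, "tag": "FocalLength"},
    {"label": "Lens Model", "raw": {"_content": "EF50mm f/1.8 II"}, "tag": "LensModel"},
]

row = flatten_exif(sample)
print(row)
# {'Make': 'Canon', 'Model': 'Canon EOS REBEL T5i',
#  'FocalLength': '50 mm', 'LensModel': 'EF50mm f/1.8 II'}
```

One such record per photograph can then be stacked into a table, with missing tags simply absent from the record.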

And this is an example of the resulting dataframe:

| Camera manufacturer | Camera model | Exposure | Aperture | Focal Length |
|---|---|---|---|---|
| Canon | Canon PowerShot S2 IS | 1/640 | f4 | 72mm |
| Panasonic | DMC-FX9 | 1/640 | f3.6 | 10mm |
| Canon | Canon EOS 20D | 1/1200 | f11 | 560mm |
| Nikon | NIKON D50 | 1/250 | f5 | 125mm |
| Canon | Canon PowerShot SD600 | 1/800 | f2.8 | 5.8mm |
| … | … | … | … | … |
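The values in a table like this are strings, so any numerical use of them (for example, grouping photographs by focal length) would first need a conversion step. The sketch below shows one way to do that with the standard library only. The helper names are hypothetical, and whether the project converts these fields at all is an assumption on my part.

```python
from fractions import Fraction


def parse_exposure(text):
    """Convert an exposure string such as '1/640' to seconds."""
    return float(Fraction(text))


def parse_aperture(text):
    """Convert an aperture string such as 'f4' or 'f/2.8' to its f-number."""
    # lstrip removes any leading 'f' and '/' characters.
    return float(text.lower().lstrip("f/"))


def parse_focal_length(text):
    """Convert a focal length string such as '72mm' or '50.0 mm' to millimetres."""
    return float(text.lower().replace("mm", "").strip())


# First row of the table above.
exposure = parse_exposure("1/640")
aperture = parse_aperture("f4")
focal_length = parse_focal_length("72mm")
print(exposure, aperture, focal_length)
```

Real EXIF data is messier than these five rows, so a fuller version would need to handle missing or malformed values rather than letting `float` raise.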

This proof of concept was published as part of the article On Machine Vision and Photographic Imagination (with Tobias Blanke) in the journal AI & Society. See my publications for more.

🖥️ Code and 💾 data for this project are available in this repository.