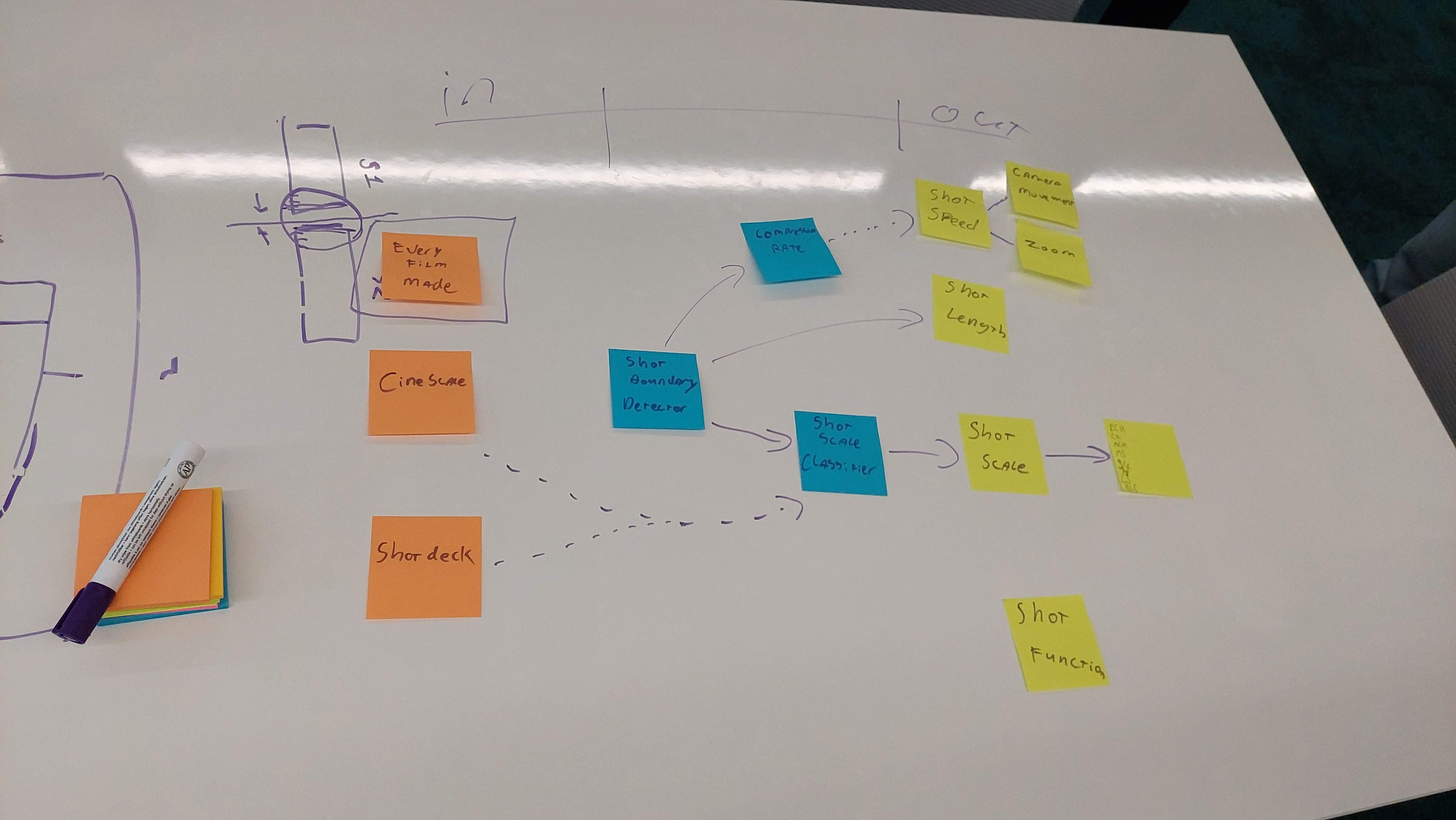

This strand on table 3 focused on cinematic time at the most local level, from shot to shot and even frame to frame: a kind of computational poetics of moving images, which is probably the closest to my own work. We discussed more or less established methods to calculate cutting rates, such as average shot length (see for example Cinemetrics and this shorter piece by Stephen Follows), and their automation using techniques such as shot boundary detection.
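As a rough illustration of those two ideas, here is a minimal sketch in Python. It is not the Cinemetrics implementation: the names `detect_cuts` and `average_shot_length`, the representation of frames as flat lists of pixel intensities, and the threshold value are all assumptions made for the example. A real detector would also need to cope with fades, dissolves and camera motion.

```python
def detect_cuts(frames, threshold=30.0):
    """Naive shot boundary detection (illustrative only).

    `frames` is assumed to be a list of equally sized flat lists of pixel
    intensities (0-255). A cut is flagged at frame i when the mean absolute
    difference from frame i-1 exceeds `threshold` (an arbitrary value).
    """
    cuts = []
    for i in range(1, len(frames)):
        prev, curr = frames[i - 1], frames[i]
        diff = sum(abs(a - b) for a, b in zip(prev, curr)) / len(curr)
        if diff > threshold:
            cuts.append(i)
    return cuts


def average_shot_length(cut_times, duration):
    """Average shot length: total duration divided by the number of shots.

    `cut_times` are the positions of the cuts, in the same unit as
    `duration` (seconds or frames).
    """
    boundaries = [0.0] + sorted(cut_times) + [duration]
    shot_lengths = [b - a for a, b in zip(boundaries, boundaries[1:])]
    return sum(shot_lengths) / len(shot_lengths)


# Four tiny "frames" of four pixels each: two dark, then two bright.
frames = [[10] * 4, [12] * 4, [200] * 4, [198] * 4]
cuts = detect_cuts(frames)
print(cuts)                                    # [2]
print(average_shot_length(cuts, len(frames)))  # 2.0 (frames per shot)
```

Dividing the result by the frame rate would give the average shot length in seconds.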

One of the ideas that emerged from these discussions is the subjective experience of film at a more perceptual (phenomenological?) level, and the language we use to describe that kind of experience, e.g. fast or slow. Stephen elaborated on this and got us to ask whether there might be a correlation between fast subjects and fast cutting rates, or between editing styles and the perception of time on screen.

What would it take to engineer a fast-slow signal from sequences of frames? How slow is slow cinema?

I mentioned the method we used to calculate the “energy” section of the Made by Machine BBC programme in 2018, which basically consists of reverse engineering compression. I found some of my writing on the idea and some experiments I ran back in my PhD days. And Carlo Bretti ran an implementation of shot scale detection during day two of the workshop.
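To give a flavour of what borrowing from compression could look like, here is a toy sketch in Python. It is an assumption-laden stand-in, not the method used for Made by Machine: the hypothetical `motion_energy` function treats frames as raw byte strings of equal length, takes the frame-to-frame residual, and uses the size of that residual after general-purpose `zlib` compression as a crude fast-slow signal. The intuition is that a static image leaves an almost empty residual that compresses to very little, while lots of change leaves a residual that does not.

```python
import zlib


def motion_energy(prev_frame: bytes, curr_frame: bytes) -> int:
    """Crude "energy" proxy: compressed size of the frame-to-frame residual.

    Both frames are assumed to be raw pixel bytes of equal length.
    The function name and the approach are illustrative assumptions.
    """
    # Per-byte difference, wrapped into 0-255 so it stays a valid byte.
    residual = bytes((c - p) % 256 for p, c in zip(prev_frame, curr_frame))
    return len(zlib.compress(residual))


# A flat frame, and a busy frame built from an arbitrary arithmetic pattern.
flat = bytes(1024)
busy = bytes((i * 37 + i * i % 251) % 256 for i in range(1024))

still = motion_energy(flat, flat)   # nothing changes between frames
moving = motion_energy(flat, busy)  # everything changes between frames
print(still < moving)               # True
```

Computed over every consecutive pair of frames, the resulting numbers form a time series that could be smoothed and compared against cutting rates.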